Category: Audio programming

Laban Movement Analysis in Unity: my take on translating LMA into C# and using it to create a motion-controlled instrument in virtual reality.

Working with computer vision and motion-controlled audio in a web browser, using the JavaScript export feature of RNBO, part of Max MSP.

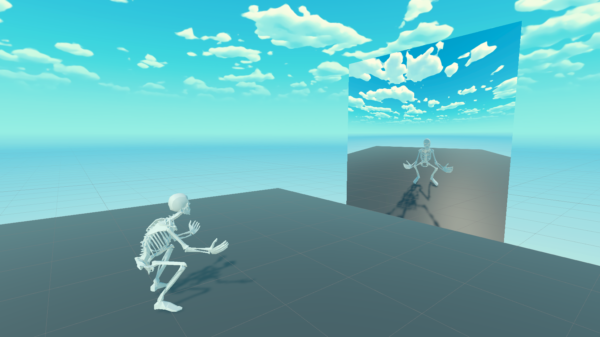

Unity implementation of computer-vision-based full-body pose estimation that works with a Quest 2 and a single webcam.

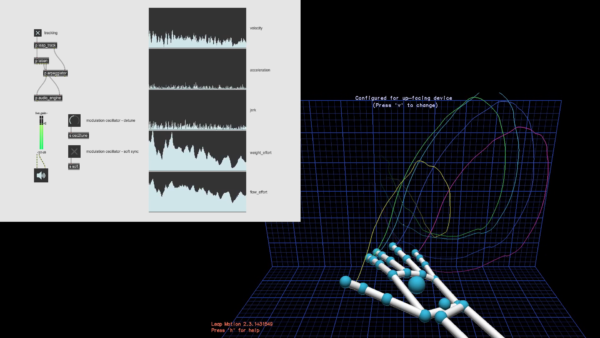

Laban Movement Analysis in Max MSP: an instrument built in Max MSP and controlled with data from a Leap Motion controller.

Gesture-controlled instrument for collaborative music creation. It is an online application that runs in the browser and tracks the user's hand motion with a webcam.

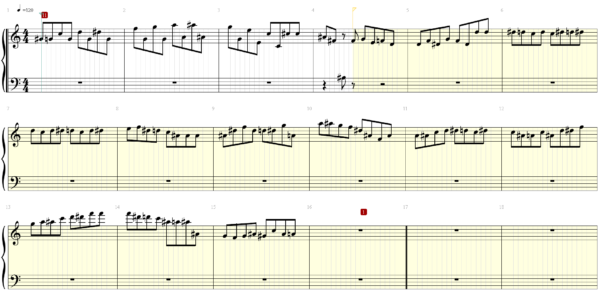

Example of using machine learning for music generation. I used a long short-term memory (LSTM) recurrent neural network to generate new compositions in the style of Mozart.

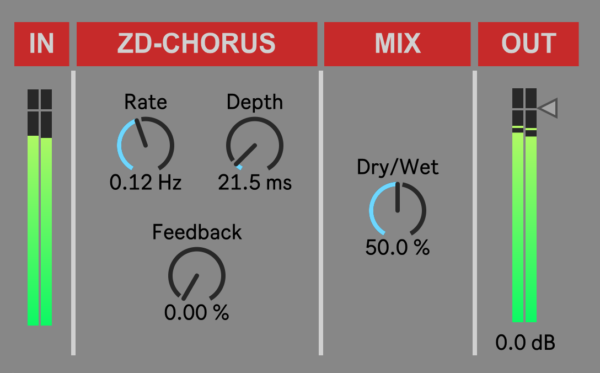

Simple Juno-inspired stereo chorus in the form of a Max for Live audio effect device.

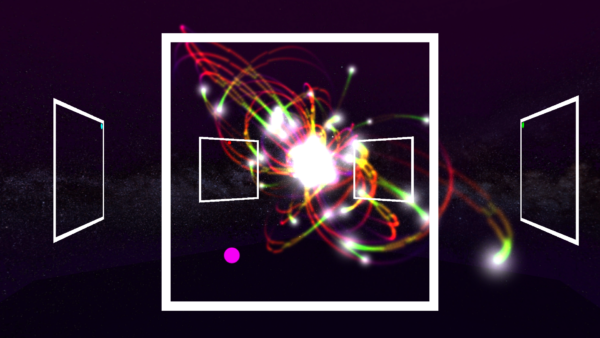

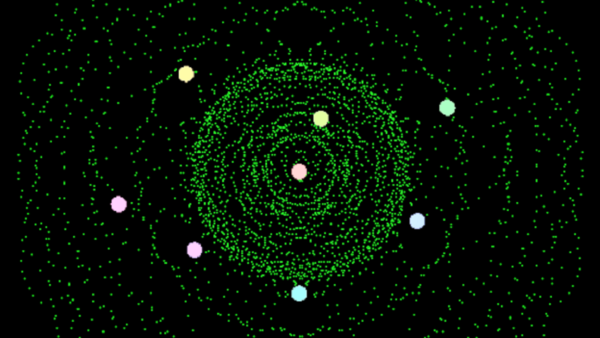
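The device itself is a Max for Live patch and is not reproduced here. As a rough illustration of the general technique a chorus of this kind relies on, the sketch below mixes the dry signal with a short delay line whose delay time is swept by a triangle LFO, with the right channel's LFO running in antiphase to widen the stereo image. The function names, the linear interpolation, and all parameter values (`rate_hz`, `base_ms`, `depth_ms`, `mix`) are assumptions for illustration, not the settings of the actual device.

```python
import numpy as np

def triangle(phase):
    """Unipolar triangle wave in [0, 1]; phase is measured in cycles."""
    return 1.0 - np.abs(2.0 * (phase % 1.0) - 1.0)

def stereo_chorus(x, sr, rate_hz=0.5, base_ms=3.5, depth_ms=1.5, mix=0.5):
    """Hypothetical LFO-modulated delay chorus; returns a (2, len(x)) stereo array."""
    n = np.arange(len(x))
    phase = rate_hz * n / sr
    # Delay times in samples; the right channel's LFO is offset by half a cycle (antiphase).
    d_left = (base_ms + depth_ms * triangle(phase)) * sr / 1000.0
    d_right = (base_ms + depth_ms * triangle(phase + 0.5)) * sr / 1000.0

    def delayed(delay):
        # Fractional-delay read via linear interpolation; reads before the start are silence.
        return np.interp(n - delay, n, x, left=0.0, right=0.0)

    left = (1.0 - mix) * x + mix * delayed(d_left)
    right = (1.0 - mix) * x + mix * delayed(d_right)
    return np.stack([left, right])
```

Because the two channels read the same delay line at opposite points of the LFO cycle, a mono input comes out as a slowly shifting stereo pair. Setting `mix=0.0` returns the dry signal on both channels.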

Flock of birds (boids) controlling a granular synthesis musical instrument programmed in Max.
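The Max patch is not shown here. The sketch below illustrates the general idea under stated assumptions: a Reynolds-style boids update (cohesion, alignment, separation) in a unit square, followed by a purely hypothetical mapping in which each boid drives one grain voice. In this mapping, x position selects the read point in a sample buffer, y position sets the pitch ratio, and speed shortens the grain. All weights, ranges, and function names are illustrative. For simplicity, neighbour distances ignore the wrap-around at the edges.

```python
import numpy as np

def boids_step(pos, vel, dt=0.05, radius=0.2, sep_dist=0.05,
               w_coh=0.5, w_align=0.3, w_sep=1.0, max_speed=0.5):
    """One boids update; positions wrap around the unit square."""
    n = len(pos)
    diff = pos[None, :, :] - pos[:, None, :]   # diff[i, j] = pos[j] - pos[i]
    dist = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(dist, np.inf)             # a boid is not its own neighbour
    neigh = dist < radius
    close = dist < sep_dist
    acc = np.zeros_like(pos)
    for i in range(n):
        if neigh[i].any():
            # Cohesion: steer toward the centre of nearby boids.
            acc[i] += w_coh * diff[i, neigh[i]].mean(axis=0)
            # Alignment: steer toward the neighbours' average velocity.
            acc[i] += w_align * (vel[neigh[i]].mean(axis=0) - vel[i])
        if close[i].any():
            # Separation: push away from boids that are too close.
            acc[i] -= w_sep * diff[i, close[i]].sum(axis=0)
    vel = vel + acc * dt
    speed = np.linalg.norm(vel, axis=1, keepdims=True)
    # Clamp speed so the flock stays controllable.
    vel = np.where(speed > max_speed, vel * max_speed / np.maximum(speed, 1e-12), vel)
    pos = (pos + vel * dt) % 1.0
    return pos, vel

def boids_to_grains(pos, vel, buffer_seconds=4.0, max_speed=0.5):
    """Hypothetical mapping: x -> buffer read time (s), y -> pitch ratio, speed -> grain length (ms)."""
    onset = pos[:, 0] * buffer_seconds
    pitch = 2.0 ** (pos[:, 1] * 2.0 - 1.0)     # one octave down to one octave up
    speed = np.linalg.norm(vel, axis=1)
    length_ms = 20.0 + 180.0 * (1.0 - np.clip(speed / max_speed, 0.0, 1.0))
    return onset, pitch, length_ms
```

Calling `boids_step` once per control tick and sending the output of `boids_to_grains` to a grain scheduler keeps the flocking logic independent of the synthesis engine.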

Joystick sequencer: lets you play chords, melody and a bass line using nothing more than a regular game controller.

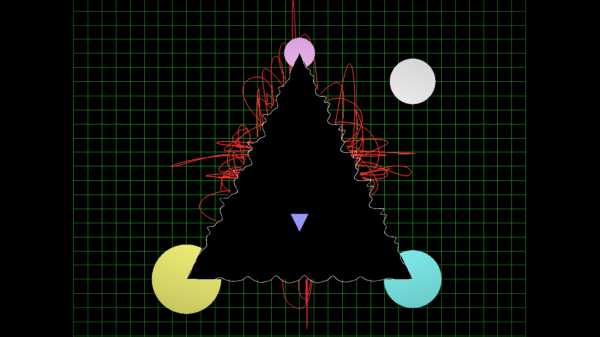

Vector synthesizer I made in Pure Data, with an onboard MPE-controllable generative sequencer. A Leap Motion controller is used to morph the wavetables and shape the sound.

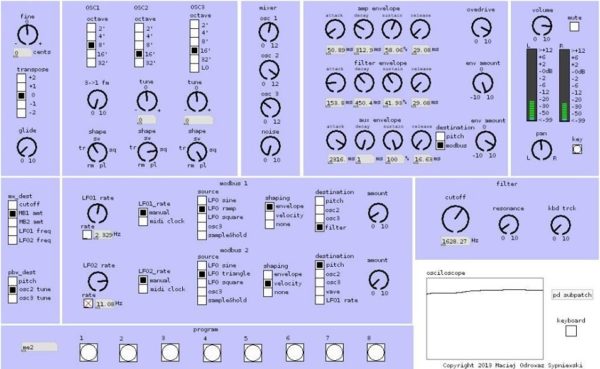
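Vector synthesis blends several sources according to a 2D control position. The sketch below assumes a simple bilinear crossfade between four single-cycle wavetables at the corners of a unit square. It also includes a hypothetical mapping from a palm position (in millimetres, centred on the sensor) to that square. The table shapes, the `half_range_mm` value, and the function names are assumptions; the Pure Data patch may use a different layout or morphing law.

```python
import numpy as np

def make_tables(size=2048):
    """Four illustrative single-cycle tables: sine, saw, square, triangle."""
    phase = np.arange(size) / size
    sine = np.sin(2 * np.pi * phase)
    saw = 2.0 * phase - 1.0
    square = np.where(phase < 0.5, 1.0, -1.0)
    tri = 1.0 - 4.0 * np.abs(phase - 0.5)
    return np.stack([sine, saw, square, tri])

def vector_weights(x, y):
    """Bilinear corner weights for x, y in [0, 1]; they always sum to 1."""
    return np.array([(1 - x) * (1 - y), x * (1 - y), (1 - x) * y, x * y])

def morph(tables, x, y):
    """Weighted sum of the four tables at control position (x, y)."""
    return vector_weights(x, y) @ tables

def hand_to_xy(palm_x_mm, palm_z_mm, half_range_mm=150.0):
    """Hypothetical mapping of a palm position to the unit control square."""
    x = np.clip((palm_x_mm + half_range_mm) / (2 * half_range_mm), 0.0, 1.0)
    y = np.clip((palm_z_mm + half_range_mm) / (2 * half_range_mm), 0.0, 1.0)
    return float(x), float(y)
```

Because the weights always sum to 1, moving the hand changes the timbre without large jumps in overall level. Each corner reproduces one source exactly, and the centre gives an equal mix of all four.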

Classic subtractive synthesizer that I programmed in Pure Data, with 84 adjustable parameters, preset memory and full MIDI control.