Max MSP recently gained the RNBO add-on. It allows code export to various targets, including the web (JavaScript), audio plugins (VST/AudioUnit), Raspberry Pi and C++, among others. Since my interests include creating dynamic audio applications that respond to motion, I was very eager to try this new feature. I have worked with motion-controlled audio in the web browser before, but now it seems even more accessible.

HandtrackFM

For my first project I decided to create a simple audio generator whose parameters are linked to the position of the user's hand. The set of motion controls resembles the features we used in the collaborative instrument project. The audio generator is a simple frequency modulation (FM) synthesizer consisting of a single carrier and modulator pair with a tremolo effect. To make the project accessible I decided to use computer vision, specifically the Handtrack.js library, on the assumption that more potential users own a webcam than a controller such as a Leap Motion or an IMU with a gyroscope. Being able to host the application on a website further increases its ease of use: users don't even have to download and install anything, and they can run it as soon as the web page loads.
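The synthesizer itself lives in the RNBO patch, which the post doesn't show, so the following is only a minimal TypeScript sketch of the kind of signal a single carrier/modulator pair with tremolo produces. It assumes Chowning-style FM implemented as phase modulation, a fixed modulation index and a sinusoidal tremolo; the names `FmParams`, `modIndex` and `tremoloDepth` are hypothetical and not taken from the original patch.

```typescript
// Hypothetical parameter set; the original RNBO patch's names and ranges are unknown.
interface FmParams {
  carrierHz: number;    // carrier frequency
  modulatorHz: number;  // modulator frequency
  modIndex: number;     // modulation index (assumed fixed)
  tremoloHz: number;    // tremolo rate
  tremoloDepth: number; // 0 = no tremolo, 1 = gain dips all the way to 0
}

// Renders numSamples samples of a sine carrier phase-modulated by a sine
// modulator, multiplied by a sinusoidal tremolo gain in [1 - depth, 1].
function renderFmTremolo(p: FmParams, sampleRate: number, numSamples: number): Float32Array {
  const out = new Float32Array(numSamples);
  for (let n = 0; n < numSamples; n++) {
    const t = n / sampleRate;
    // The modulator output is added to the carrier's phase.
    const phaseOffset = p.modIndex * Math.sin(2 * Math.PI * p.modulatorHz * t);
    const carrier = Math.sin(2 * Math.PI * p.carrierHz * t + phaseOffset);
    // Tremolo gain starts at 1 and oscillates down to 1 - tremoloDepth.
    const gain = 1 - 0.5 * p.tremoloDepth * (1 - Math.cos(2 * Math.PI * p.tremoloHz * t));
    out[n] = gain * carrier;
  }
  return out;
}

// Example: 10 ms at 48 kHz with a 220 Hz carrier and a 110 Hz modulator.
const block = renderFmTremolo(
  { carrierHz: 220, modulatorHz: 110, modIndex: 2, tremoloHz: 5, tremoloDepth: 0.5 },
  48000,
  480,
);
```

In the actual project this per-sample computation happens inside the exported RNBO code; the hand position only changes the parameters.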

I connected the carrier frequency, the modulator frequency and the tremolo frequency to the X, Y and Z position of the hand, respectively. The source code is available on my GitHub and the live demo is available here.
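The post doesn't describe how the hand position is scaled, so the sketch below is an assumption throughout. Handtrack.js reports each detection with a `bbox` of the form `[x, y, width, height]` in pixels; the sketch takes the box centre for X and Y and, because a webcam gives no direct depth, uses the box size as a hypothetical proxy for Z (a larger box meaning the hand is closer). The frequency ranges and the exponential mapping are illustrative choices, not values from the project.

```typescript
// [x, y, width, height] in pixels, the bbox layout Handtrack.js reports for a detection.
type BBox = [number, number, number, number];

interface FreqRange { min: number; max: number; }

// Hypothetical ranges in Hz; the original project's ranges are not stated.
const CARRIER: FreqRange = { min: 100, max: 1000 };
const MODULATOR: FreqRange = { min: 1, max: 500 };
const TREMOLO: FreqRange = { min: 0.5, max: 20 };

// Maps t in [0, 1] exponentially onto [min, max], so equal hand movements
// give equal frequency ratios (musical intervals) rather than equal steps in Hz.
function expMap(t: number, r: FreqRange): number {
  const c = Math.min(1, Math.max(0, t));
  return r.min * Math.pow(r.max / r.min, c);
}

function handToFrequencies(bbox: BBox, frameWidth: number, frameHeight: number) {
  const [x, y, w, h] = bbox;
  const cx = (x + w / 2) / frameWidth;  // 0 = left edge, 1 = right edge
  const cy = (y + h / 2) / frameHeight; // 0 = top edge, 1 = bottom edge
  // Z proxy (assumption): square root of the box area relative to the frame.
  const z = Math.sqrt((w * h) / (frameWidth * frameHeight));
  return {
    carrierHz: expMap(cx, CARRIER),
    modulatorHz: expMap(1 - cy, MODULATOR), // raising the hand raises the frequency
    tremoloHz: expMap(z, TREMOLO),
  };
}

// Example: a hand whose box fills the whole 640x480 frame.
const freqs = handToFrequencies([0, 0, 640, 480], 640, 480);
```

In the browser, these values would be recomputed for every detection frame and written to the corresponding parameters of the exported RNBO device.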

FaceArp

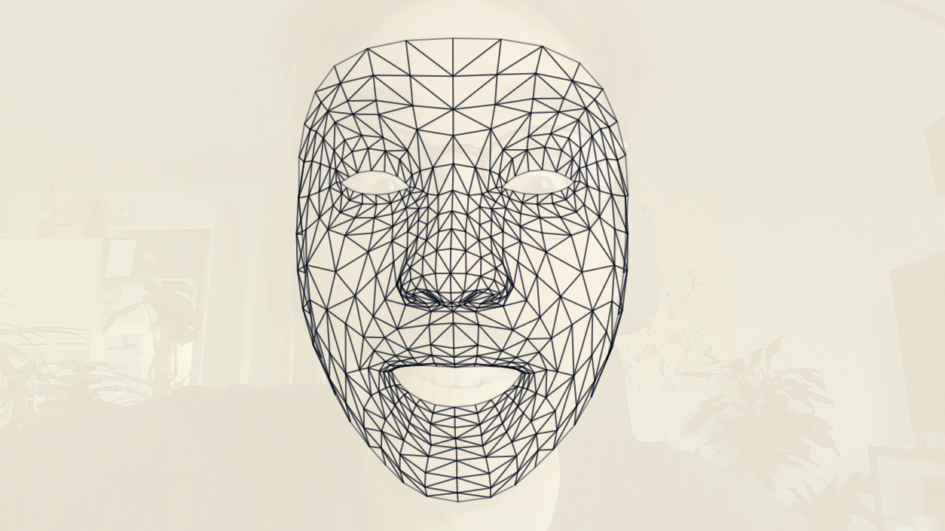

I came up with the idea for another project while working on the first one. I wanted to try face tracking and use it to control a sound generator. The basic idea is similar to the first project, but this time I added more features, including MIDI input, computer keyboard support, visuals reacting to the audio and more controllable parameters. For this project I used MediaPipe's Face Mesh library. I created a generative arpeggiator sequencer that plays in the key and range chosen by the user. Using their head, the user can control the low-pass filter cutoff, the sequencer note probability, the stereo pan and the reverb send. The chord root note is determined by the incoming MIDI note, and the user can also play notes from the computer keyboard. I added the probability parameter to break up the repetitiveness of the chord note sequence.
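The arpeggiator logic isn't shown in the post, so here is a minimal TypeScript sketch of a generative arpeggiator under stated assumptions: the key is reduced to a major triad built on the root, the range is a number of octaves, the pattern simply steps upwards and wraps around, and each step sounds only if a random draw falls below the note probability. `chordNotes`, `GenerativeArp` and their methods are hypothetical names, and the random source is injectable so the behaviour can be tested deterministically.

```typescript
// Major scale intervals in semitones; the triad uses scale degrees 1, 3 and 5.
const MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11];
const TRIAD_DEGREES = [0, 2, 4];

// Builds the chord notes (MIDI note numbers) over the given number of octaves.
function chordNotes(rootMidi: number, octaves: number): number[] {
  const notes: number[] = [];
  for (let o = 0; o < octaves; o++) {
    for (const d of TRIAD_DEGREES) {
      notes.push(rootMidi + MAJOR_SCALE[d] + 12 * o);
    }
  }
  return notes;
}

class GenerativeArp {
  notes: number[];
  probability: number; // 0..1, chance that a step actually plays
  step = 0;
  rng: () => number;

  constructor(rootMidi: number, octaves: number, probability: number, rng: () => number = Math.random) {
    this.notes = chordNotes(rootMidi, octaves);
    this.probability = probability;
    this.rng = rng;
  }

  // Called when a MIDI note-on (or a mapped computer key) sets a new root.
  setRoot(rootMidi: number, octaves: number): void {
    this.notes = chordNotes(rootMidi, octaves);
    this.step = 0;
  }

  // Advances one step; returns the MIDI note to play, or null for a rest.
  next(): number | null {
    if (this.notes.length === 0) return null;
    const note = this.notes[this.step % this.notes.length];
    this.step++;
    return this.rng() < this.probability ? note : null;
  }
}

// Example: C4 root over two octaves, every step plays.
const arp = new GenerativeArp(60, 2, 1, () => 0);
const seq = [arp.next(), arp.next(), arp.next(), arp.next()];
```

Keeping the probability check separate from the note order means the pattern stays in place and only individual steps drop out, which is one way to break up the repetitiveness described above.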

In addition, I thought it would be interesting to render the face mesh over the camera preview as the visuals, with the structure of the mesh reacting to the audio amplitude. This was also a pretext for me to test sending parameters from RNBO to JavaScript. I believe the inspiration for linking mouth opening to the reverb send came from my childhood: as a kid, when I played music on my phone close to my mouth, I could get a phasing effect by opening and closing my mouth. The source code for this project is also available on my GitHub, and the live demo is available here. I have tested both applications and can confirm that they work in current versions of the Chrome and Edge web browsers.
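Neither the mouth-opening measurement nor the mesh animation is spelled out in the post, so the TypeScript sketch below is one possible approach, not the project's code. It assumes Face Mesh landmarks arrive as normalized `{x, y, z}` points and assumes indices 13 and 14 for the inner upper and lower lip and 10 and 152 for the top of the forehead and the chin; these should be checked against the mesh topology before relying on them. The lip gap is divided by the face height so the value is roughly independent of the distance to the camera, and the mesh is scaled away from its centroid in proportion to an amplitude value that, in the project, would come from RNBO. The `maxRatio` and `gain` defaults are hypothetical.

```typescript
interface Landmark { x: number; y: number; z: number; }

// Assumed Face Mesh landmark indices (verify against the topology you use).
const UPPER_INNER_LIP = 13;
const LOWER_INNER_LIP = 14;
const FOREHEAD_TOP = 10;
const CHIN = 152;

function dist2d(a: Landmark, b: Landmark): number {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Returns 0 (closed) to 1 (open at or beyond maxRatio), usable as a reverb send.
// maxRatio is a hypothetical lip-gap / face-height ratio treated as "fully open".
function mouthOpenness(lm: Landmark[], maxRatio = 0.15): number {
  const faceHeight = dist2d(lm[FOREHEAD_TOP], lm[CHIN]);
  if (faceHeight === 0) return 0;
  const ratio = dist2d(lm[UPPER_INNER_LIP], lm[LOWER_INNER_LIP]) / faceHeight;
  return Math.min(1, ratio / maxRatio);
}

// Pushes every landmark away from the mesh centroid (in x and y) by a factor
// that grows with the audio amplitude; gain is a hypothetical sensitivity.
function displaceMesh(lm: Landmark[], amplitude: number, gain = 0.2): Landmark[] {
  if (lm.length === 0) return [];
  const cx = lm.reduce((s, p) => s + p.x, 0) / lm.length;
  const cy = lm.reduce((s, p) => s + p.y, 0) / lm.length;
  const scale = 1 + gain * Math.max(0, amplitude);
  return lm.map((p) => ({ x: cx + (p.x - cx) * scale, y: cy + (p.y - cy) * scale, z: p.z }));
}
```

Smoothing the openness value over a few frames (for example with a simple one-pole low-pass) would likely keep the reverb send from jittering with tracking noise.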
